It’s a problem familiar to financial crime and compliance teams worldwide. Multiple siloed case management systems don’t provide the single source of truth analysts need to work productively. Information is hard to find and spread across multiple pieces of software. All the while, backlogs are continuing to build.

At ComplyAdvantage, we built our case management solution in-house, drawing on more than a decade of experience in the compliance space. It is designed to address these challenges while requiring minimal training.

In this article, we’ll explore the art and opportunity of case management for financial crime risk management, and anti-money laundering (AML) in particular. We’ll also explain how our approach improves outcomes for analysts, team leads, and Money Laundering Reporting Officers (MLROs).

This article is part of a series on the capabilities of the ComplyAdvantage Mesh platform. Rather than being a dedicated case management solution, Mesh is designed to provide a 360-degree view of risk in a single, modular platform. Links to the rest of the series are available at the end of this article.

What do we mean by case management for fincrime and compliance?

Case management in the AML and financial crime world refers to the software compliance teams use to remediate risk alerts efficiently. It is used to view and investigate alerts, assess potential risks, document observations and decisions, and escalate where needed. How the platform is used varies by job role. Analysts, for example, typically use it to clear a large volume of cases quickly and accurately. Team leads use it to manage case backlogs while ensuring high-risk cases are dealt with as a priority. A compliance leader or MLRO, meanwhile, uses it to put the company’s risk policy into practice.

The ComplyAdvantage Mesh platform offers a holistic case management solution across customer screening, company screening, and ongoing monitoring, with payment screening and transaction monitoring to follow.

Common AML case management challenges

When speaking to financial institutions, we hear four common frustrations with AML case management software:

- Poor analyst productivity.

- Inability to make efficient risk decisions.

- Ever-increasing case backlogs.

- Lack of preparedness for auditor scrutiny.

We believe five root causes are driving these challenges:

- Remediation information is spread across multiple systems.

- Audit trails are missing or not detailed enough.

- Analysts are spending too long searching for context on previous remediation decisions.

- Workloads are presented without an order or prioritization.

- Many AML case management systems don’t offer flexible, no-code configurability.

How ComplyAdvantage solves common case management problems

Case management in ComplyAdvantage Mesh was built to serve all the key functions within an enterprise compliance team. From the outset, we set the following goals for the solution, aligned to the key roles within the team:

| Financial crime analyst | Team lead | MLRO/Head of Compliance |
|---|---|---|
| Clear cases quickly and efficiently. | Manage case backlogs and easily delegate work. | Reduce friction in customer processes caused by slow compliance work. |
| Document the rationale behind decisions. | Prioritize high-risk cases. | Ensure compliance processes are followed and the firm is always audit-ready. |
| Highlight high-risk cases with confidence. | Manage team performance and identify issues. | |

Here are five key benefits of our AML case management solution that deliver on these goals:

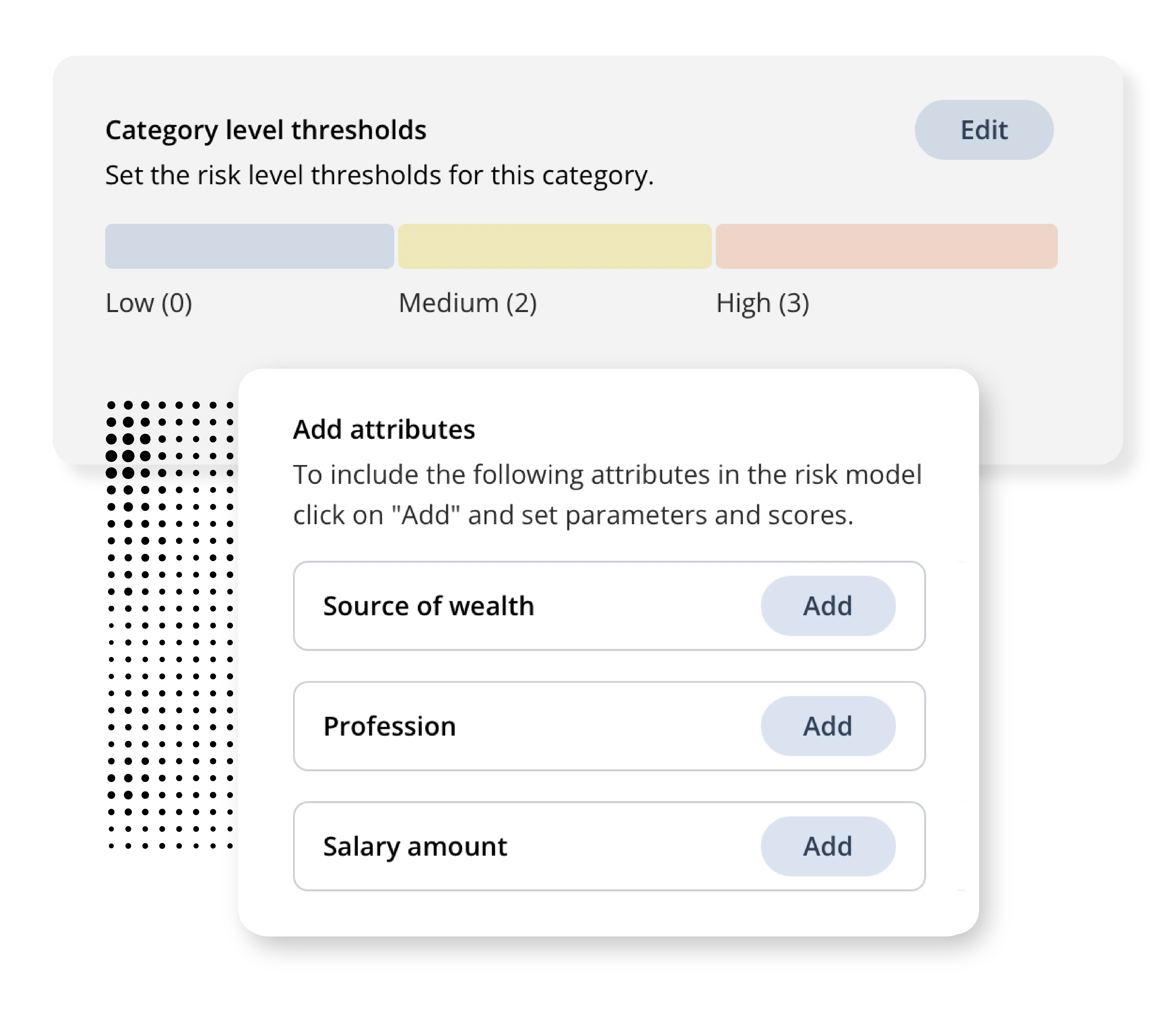

1. Manage customer risk scores flexibly

Most case management solutions will offer some kind of low/medium/high-risk taxonomy, but how flexible are those categories? Can they be quickly aligned to a firm’s specific risk-based approach? What risk types are offered? The ComplyAdvantage Mesh case management platform provides a range of risk types, including four politically exposed person (PEP) categories and adverse media options such as property, fraud, financial, narcotics, and violence. Risk models can be set up for companies and people, with scores automatically updated based on insights from the screening and monitoring platforms.
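To make configurable risk categories more concrete, here is a minimal Python sketch of a weighted scoring model that maps screening risk types onto a low/medium/high taxonomy. The risk-type keys, weights, band thresholds, and the 100-point cap are all hypothetical values chosen for illustration; they are not Mesh’s actual scoring model or configuration.

```python
from dataclasses import dataclass

# Hypothetical weights per risk type (four PEP classes plus adverse media types).
# A real firm would tune these to its own risk-based approach.
RISK_WEIGHTS = {
    "pep_class_1": 40,
    "pep_class_2": 30,
    "pep_class_3": 20,
    "pep_class_4": 10,
    "adverse_media_fraud": 35,
    "adverse_media_financial": 30,
    "adverse_media_narcotics": 35,
    "adverse_media_violence": 35,
    "adverse_media_property": 15,
}

# Hypothetical band thresholds (inclusive lower bounds), checked highest first.
BANDS = [(60, "high"), (30, "medium"), (0, "low")]


@dataclass
class Customer:
    name: str
    risk_types: set[str]  # risk types flagged by screening and monitoring


def risk_score(customer: Customer) -> int:
    """Sum the weights of every flagged risk type, capped at 100."""
    total = sum(RISK_WEIGHTS.get(rt, 0) for rt in customer.risk_types)
    return min(total, 100)


def risk_band(score: int) -> str:
    """Map a numeric score onto the low/medium/high taxonomy."""
    for threshold, label in BANDS:
        if score >= threshold:
            return label
    return "low"
```

Because the weights and bands live in plain data rather than logic, realigning the model to a different risk appetite means editing a table, not rewriting code. Re-running `risk_score` whenever screening flags change is one simple way to keep scores current.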


2. Expedite case management with bulk decision-making

Tackling alert backlogs effectively is impossible without bulk decision-making. ComplyAdvantage offers several bulk actions. These include the ability to bulk assign screening results when the status can be determined without the need to click into profile information. Where analysts have reviewed multiple cases and decided on the risk level, they can ‘select all’ and apply the same decision.
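The Python sketch below illustrates the "select all and apply the same decision" pattern. The `Case` structure, the decision labels, and the choice to skip cases that are already closed are assumptions made for illustration, not ComplyAdvantage’s API or behavior.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional

# Hypothetical decision labels for illustration only.
VALID_DECISIONS = {"true_positive", "false_positive"}


@dataclass
class Case:
    case_id: str
    status: str = "open"
    decision: Optional[str] = None


def bulk_decide(cases: Dict[str, Case], selected: Iterable[str], decision: str) -> List[str]:
    """Apply one decision to every selected open case and return the IDs updated."""
    if decision not in VALID_DECISIONS:
        raise ValueError(f"unknown decision: {decision}")
    updated = []
    for case_id in selected:
        case = cases[case_id]
        if case.status != "open":
            continue  # leave cases that were already closed untouched
        case.decision = decision
        case.status = "closed"
        updated.append(case_id)
    return updated
```

Validating the decision once, before touching any case, means an invalid bulk action fails without leaving the selection half-updated.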

3. Improve remediation efficiency with notes

Leaving easily accessible notes with cases is a powerful way of keeping a firm audit-ready and enabling efficient case remediation. The ComplyAdvantage case management platform time-stamps all notes, which can be added to open or closed cases. Notes can also be added to multiple cases simultaneously, and contextual notes can be attached to a user assignment or case decision for additional clarity. A note is mandatory before a case decision can be submitted, ensuring precise and consistent audit trails.
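A minimal Python sketch of the note rules described above: every note carries a timestamp, one note can be attached to several cases (open or closed) at once, and a decision cannot be submitted without a note. All names here (`Note`, `add_note`, `submit_decision`) are hypothetical and do not reflect the platform’s internal design.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Iterable, List, Optional


@dataclass
class Note:
    author: str
    text: str
    created_at: datetime  # UTC timestamp recorded when the note is added


@dataclass
class Case:
    case_id: str
    status: str = "open"
    decision: Optional[str] = None
    notes: List[Note] = field(default_factory=list)


def add_note(cases: Iterable[Case], author: str, text: str) -> None:
    """Attach the same time-stamped note to one or more cases, open or closed."""
    stamp = datetime.now(timezone.utc)
    for case in cases:
        case.notes.append(Note(author, text, stamp))


def submit_decision(case: Case, author: str, decision: str, note: str) -> None:
    """Record a decision; a non-blank note is required before it is accepted."""
    if not note.strip():
        raise ValueError("a note is required before submitting a decision")
    add_note([case], author, f"[{decision}] {note}")
    case.decision = decision
    case.status = "closed"
```

Enforcing the note inside `submit_decision` itself, rather than in a UI layer, means no code path can record a decision without its rationale.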

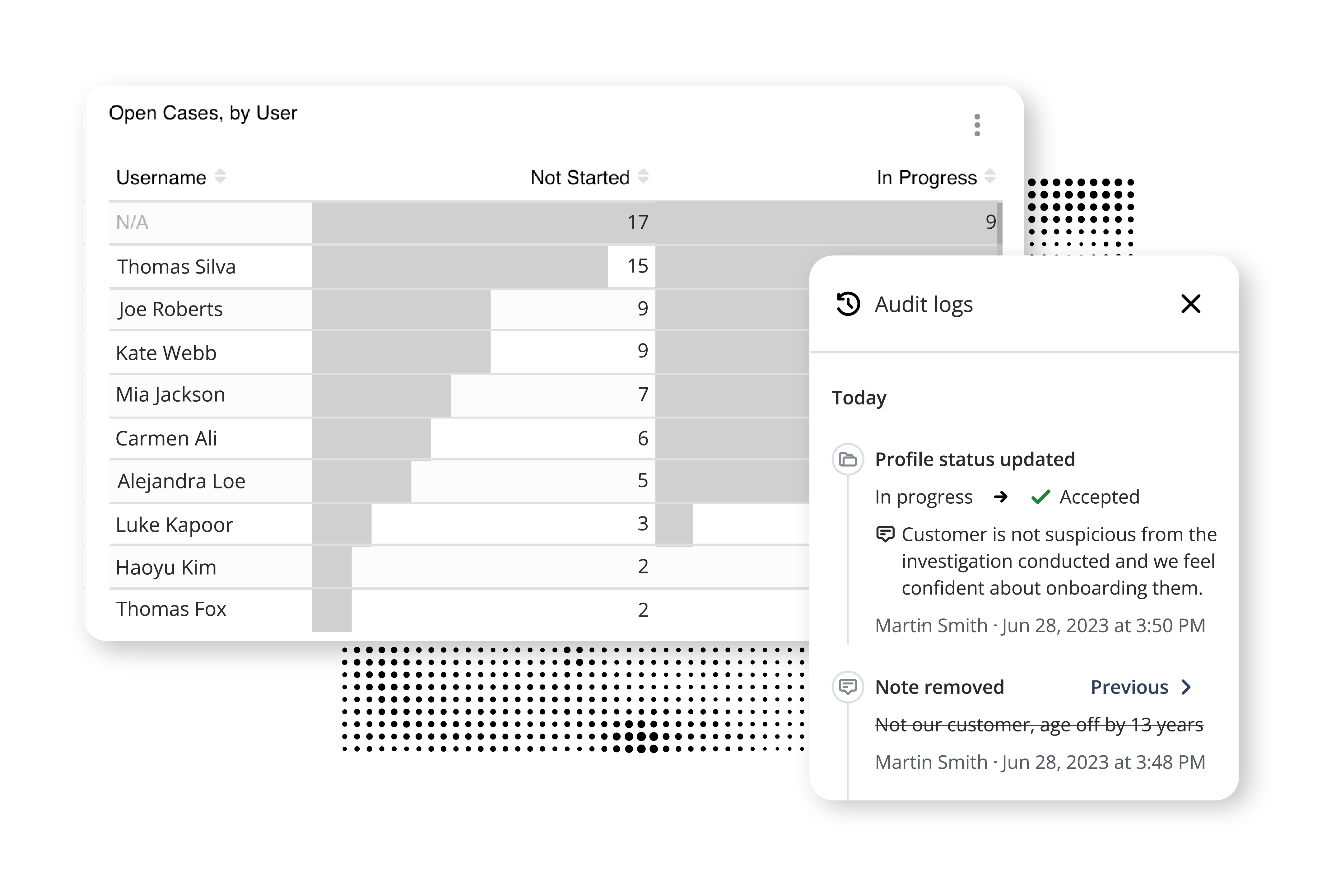

4. Ensure continuous audit readiness

An audit log is only as good as its accessibility, which is why accessibility is a key feature of the ComplyAdvantage case management platform. Audit logs are visible directly from the case screen, including all changes to a case’s status and notes from analysts. Logs are time-ordered and record customer creation, updates, risk score calculations, screening and rescreening, and the users who took those actions.
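The following Python sketch shows one way to model a time-ordered audit trail: events are appended but never edited, each records the acting user, and a per-case timeline is returned sorted by timestamp. The event fields and action labels are hypothetical and chosen only to mirror the event types listed above.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class AuditEvent:
    case_id: str
    timestamp: datetime
    user: str    # who performed the action
    action: str  # hypothetical labels, e.g. "customer_created", "rescreened"
    detail: str = ""


class AuditLog:
    """Append-only audit trail that returns per-case events in time order."""

    def __init__(self) -> None:
        self._events: List[AuditEvent] = []

    def record(self, event: AuditEvent) -> None:
        # Events are only ever appended; there is no update or delete method.
        self._events.append(event)

    def timeline(self, case_id: str) -> List[AuditEvent]:
        # Sort by timestamp so events recorded out of order still read chronologically.
        return sorted(
            (e for e in self._events if e.case_id == case_id),
            key=lambda e: e.timestamp,
        )
```

Making `AuditEvent` frozen and omitting any update or delete method keeps the sketch’s log append-only, which is the property an auditor ultimately relies on.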

5. Create and track case stages via webhooks

ComplyAdvantage Mesh allows third-party applications to connect and track events such as when a case decision is made. This is critical for supporting complex compliance tech stacks and keeping relevant stakeholders across the organization informed. Webhooks can be enabled for case creation, case state updates, and workflow completion.
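On the receiving side, a third-party application typically exposes an HTTP endpoint and routes each webhook by its event type. The Python sketch below covers only that routing step. The event names (`case.created`, `case.state_updated`, `workflow.completed`) and payload fields are hypothetical stand-ins for case creation, case state updates, and workflow completion, not Mesh’s documented schema. A production receiver would also authenticate incoming requests as described in the vendor’s webhook documentation.

```python
import json
from typing import Callable, Dict

Handler = Callable[[dict], str]


def on_case_created(payload: dict) -> str:
    return f"notify team: case {payload['case_id']} created"


def on_case_state_updated(payload: dict) -> str:
    return f"sync CRM: case {payload['case_id']} is now {payload['state']}"


def on_workflow_completed(payload: dict) -> str:
    return f"close ticket for workflow {payload['workflow_id']}"


# Hypothetical event names mapped to the downstream action each one triggers.
HANDLERS: Dict[str, Handler] = {
    "case.created": on_case_created,
    "case.state_updated": on_case_state_updated,
    "workflow.completed": on_workflow_completed,
}


def handle_webhook(body: bytes) -> str:
    """Parse a webhook body and route it to the handler for its event type."""
    payload = json.loads(body)
    handler = HANDLERS.get(payload.get("event"))
    if handler is None:
        return "ignored"  # acknowledge unknown event types without failing
    return handler(payload)
```

A dispatch table keeps each integration isolated: subscribing to a new event type means registering one more handler rather than growing a chain of conditionals.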

Find out more about ComplyAdvantage Mesh by reading the other articles in the series:

Explore how ComplyAdvantage Mesh gives firms a 360-degree view of risk

Find out more about how Mesh combines industry-leading AML risk intelligence with actionable risk signals to screen customers and monitor their behavior in near real-time.

