Integration

An API-first approach that lets institutions connect their core technology stacks seamlessly and securely.

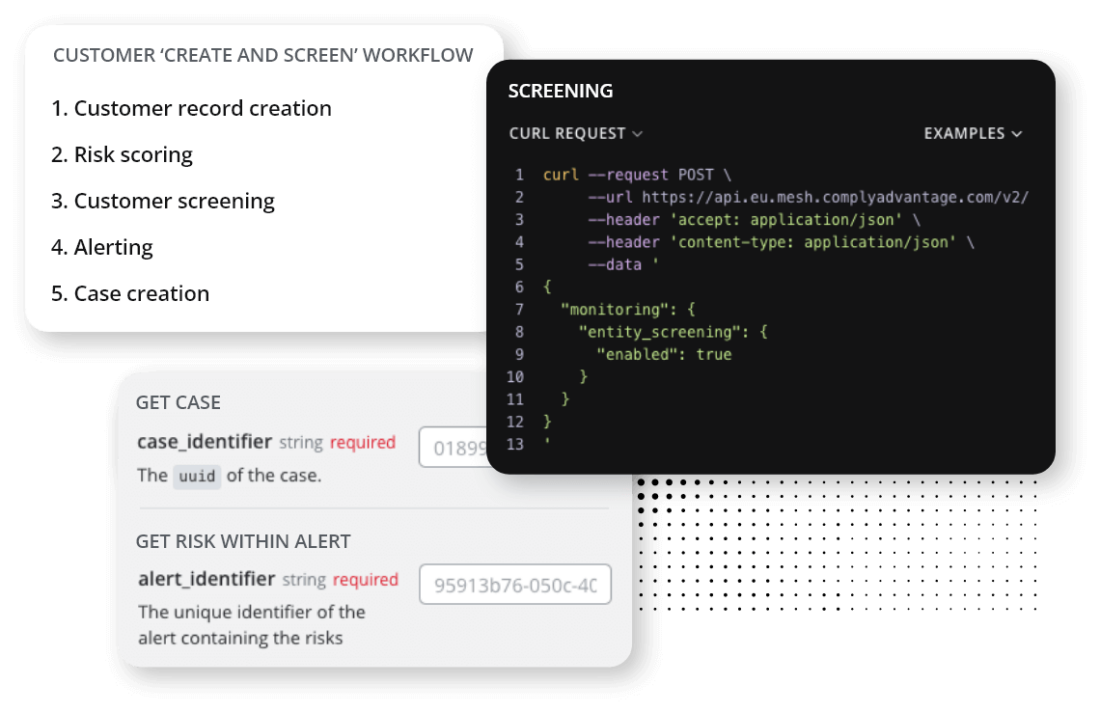

- A modular, API-first architecture and a comprehensive API that integrate cleanly with existing operational workflows.

- A developer-centric experience, backed by comprehensive integration guides, examples, and SDKs.

- Enterprise-grade scale and reliability for every mission-critical use case.

Customer screening and ongoing monitoring

Unlock the full potential of ComplyAdvantage Mesh with our comprehensive API. Streamline customer onboarding, automate due diligence, and gather real-time risk intelligence, all through a secure, easy-to-use integration.

View API documentation

Payment screening

Screen payments securely while minimizing friction for your customers, protecting your institution against risk without compromising user satisfaction.

View API documentation

Transaction monitoring

A fully flexible, API-based integration that adapts to the data objects and fields you define during deployment, ensuring continuous, effective monitoring of customer transactions.
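As a rough illustration of what adapting to "the data objects and fields you define during deployment" can look like on the client side, here is a minimal Python sketch that maps an internal transaction record onto a payload using a field mapping agreed at deployment time. The field names and the `custom_fields` key are hypothetical placeholders, not the documented ComplyAdvantage Mesh schema.

```python
import json
from typing import Any, Mapping

# Hypothetical mapping agreed during deployment: internal field -> payload field.
# These names are placeholders, not the documented ComplyAdvantage Mesh schema.
FIELD_MAPPING = {
    "txn_ref": "transaction_id",
    "amount_minor": "amount",
    "currency": "currency",
    "counterparty_iban": "counterparty_account",
}


def to_monitoring_payload(record: Mapping[str, Any], mapping: Mapping[str, str]) -> dict:
    """Rename mapped fields; collect unmapped fields under a (hypothetical) 'custom_fields' key."""
    payload: dict[str, Any] = {}
    custom: dict[str, Any] = {}
    for key, value in record.items():
        if key in mapping:
            payload[mapping[key]] = value
        else:
            custom[key] = value
    if custom:
        payload["custom_fields"] = custom
    return payload


if __name__ == "__main__":
    record = {"txn_ref": "T-1001", "amount_minor": 125000, "currency": "EUR",
              "counterparty_iban": "FR76XXXX", "channel": "mobile"}
    print(json.dumps(to_monitoring_payload(record, FIELD_MAPPING), indent=2))
```

Keeping the mapping in data rather than code means a field added at deployment only requires a configuration change.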

View API documentation

A flexible API-first integration that fits any technology stack

ComplyAdvantage offers several integration methods: API, SFTP, or our web interface.

API-first: a comprehensive, modular API

- Avoid high-risk "big bang" migrations. With our modular, granular REST API, ComplyAdvantage Mesh can serve as your complete compliance stack or as one component of a broader compliance ecosystem.

- Integrate ComplyAdvantage Mesh with your payment or core banking platforms as your needs dictate, through a range of integration mechanisms: a real-time synchronous API, asynchronous webhook-driven flows, and batch upload, including via SFTP.

- Easily extract your compliance data for downstream processes and reporting.
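For the batch route, a file is typically prepared locally and then transferred over SFTP. The sketch below serializes customer records to CSV with Python's standard `csv` module. The column layout and the file-naming convention are assumptions for illustration; the actual batch format is defined in the ComplyAdvantage documentation. The SFTP transfer itself is omitted (a library such as Paramiko is a common choice for that step).

```python
import csv
import io
from datetime import date
from typing import Iterable, Mapping

# Hypothetical column layout; check the ComplyAdvantage documentation for the real batch format.
COLUMNS = ["customer_id", "full_name", "date_of_birth", "country"]


def build_batch_csv(customers: Iterable[Mapping[str, str]]) -> str:
    """Serialize customer records to CSV text: a header row, then one row per customer."""
    buffer = io.StringIO()
    # Missing keys are written as empty cells; keys not in COLUMNS are dropped.
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, extrasaction="ignore", lineterminator="\n")
    writer.writeheader()
    for customer in customers:
        writer.writerow(customer)
    return buffer.getvalue()


def batch_filename(run_date: date) -> str:
    """Hypothetical naming convention: one file per daily run."""
    return f"customers_{run_date.isoformat()}.csv"
```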

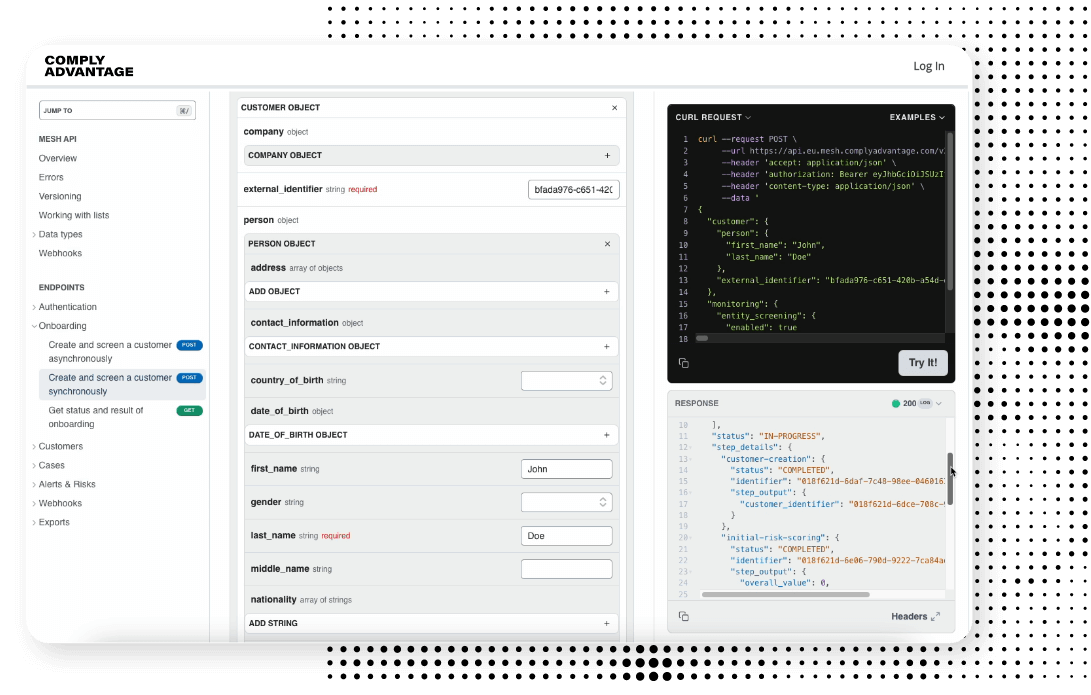

Developer-centric resources: everything you need in one place.

- Get started quickly with comprehensive, interactive API documentation.

- Build on reference integrations tailored to your use case, and download code samples directly from our documentation in the language of your choice.

- Integrate directly with our SDKs to bring ComplyAdvantage Mesh into your applications quickly.

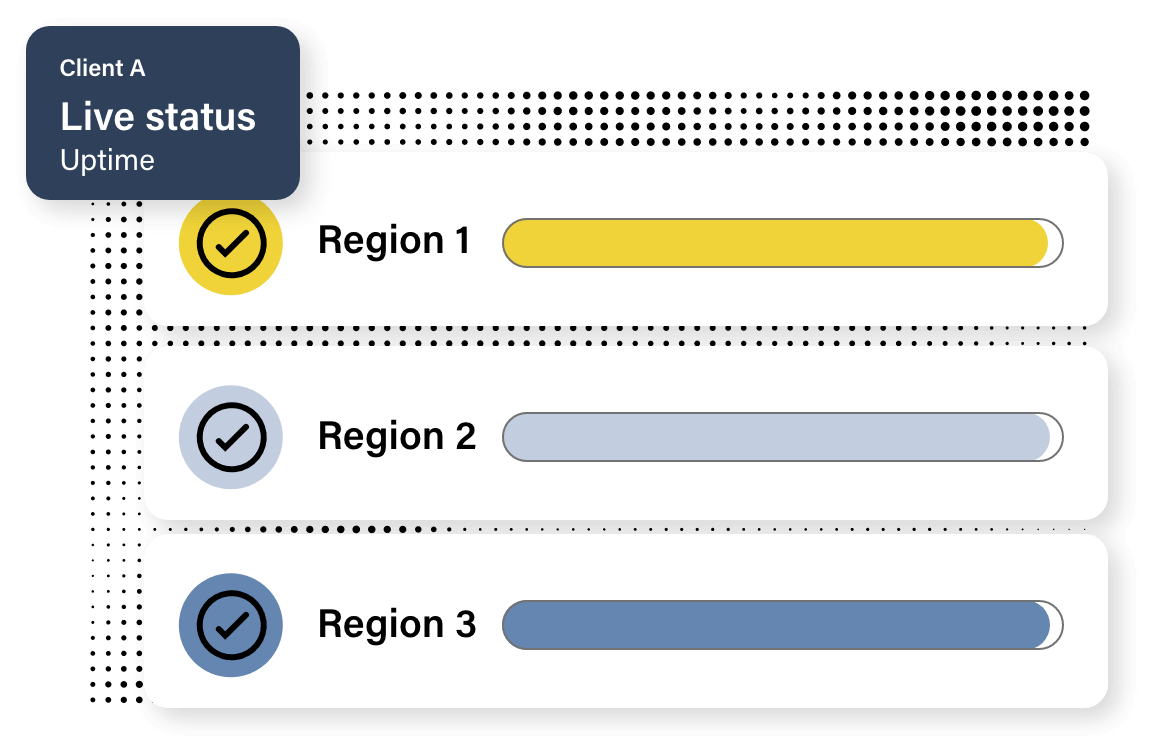

Enterprise-grade integration: scale and reliability for every mission-critical use case.

- Benefit from a scalable, highly available, secure API that powers some of the world's largest institutions.

- Ensure business continuity for mission-critical use cases with the high availability of ComplyAdvantage Mesh.

- Accelerate your payment network with real-time, low-latency integration paths.
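Service-side high availability is usually paired with defensive behavior on the client side. A common, vendor-neutral pattern is to retry transient failures with capped exponential backoff and jitter. The sketch below is generic: `TransientError` and the default parameters are hypothetical, and which errors are safe to retry depends on the semantics documented for each endpoint.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (e.g. timeouts, HTTP 503)."""


def call_with_backoff(
    func: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.2,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func, retrying TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # The backoff ceiling doubles each attempt (0.2, 0.4, 0.8, ...) up to max_delay;
            # the actual sleep is drawn uniformly below it ("full jitter").
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

For real-time payment flows, the total retry budget should stay within the latency the payment path can tolerate, which is why the attempt count and delays are parameters.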

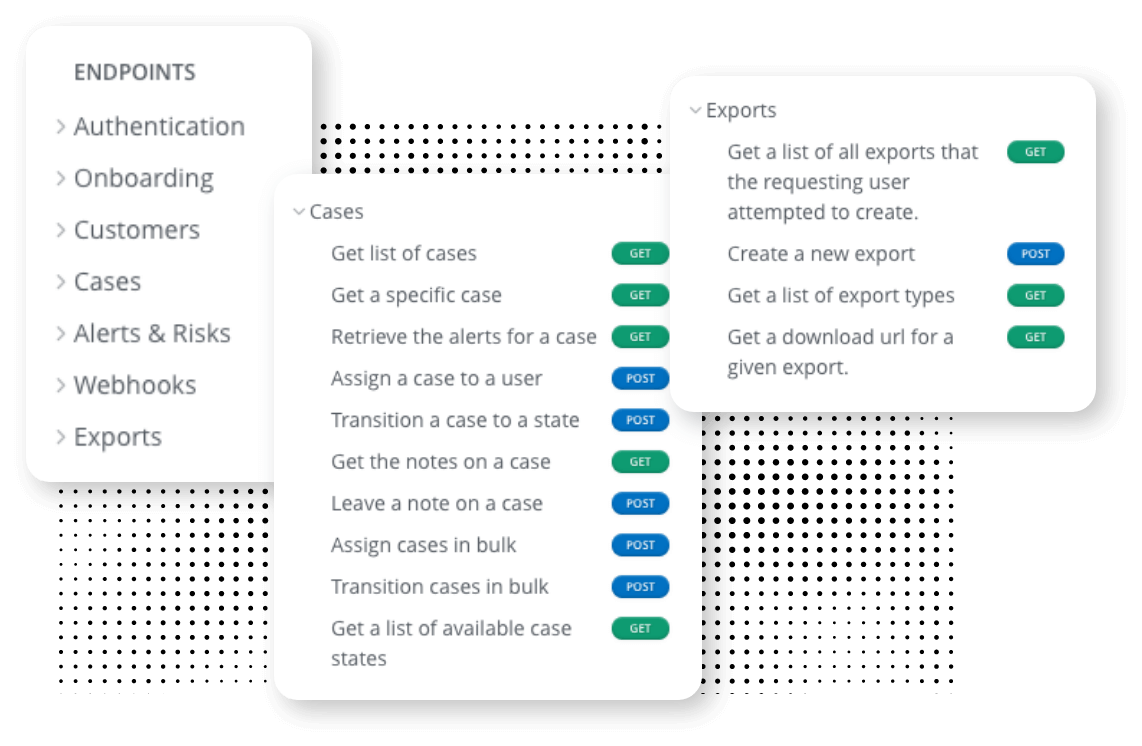

The ComplyAdvantage Mesh API lets you automate a wide range of case management activities, including listing cases, retrieving a specific case, fetching the alerts linked to a case, assigning cases, transitioning cases in bulk, and more. See the "Cases" section under "Endpoints" in the API documentation.
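To give a feel for automating case management, here is a minimal sketch that walks through a paginated list of cases. The page shape (`items` plus a `next` cursor) and the `fetch_page` callable are assumptions for illustration; the actual endpoints, parameters, and pagination scheme are defined in the "Cases" section of the API documentation.

```python
from typing import Any, Callable, Iterator, Optional

# Hypothetical page shape: {"items": [...], "next": "<cursor>" or None}.
Page = dict[str, Any]


def iter_cases(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[dict]:
    """Yield every case across pages, following the (assumed) 'next' cursor until it is empty."""
    cursor: Optional[str] = None
    while True:
        page = fetch_page(cursor)  # in practice, an authenticated GET against the cases endpoint
        yield from page.get("items", [])
        cursor = page.get("next")
        if not cursor:
            break
```

Injecting `fetch_page` keeps the pagination logic independent of the HTTP client, so it can be exercised with an in-memory stub such as `lambda cursor: pages[cursor]`.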

Yes. The ComplyAdvantage Mesh API lets you modify screening configurations.
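A configuration change over a REST API generally amounts to an authenticated request carrying a JSON body. The sketch below only builds such a request with Python's standard `urllib.request`; the base URL, the `/screening-configurations/{id}` path, the use of `PATCH`, and the `match_threshold` field are all hypothetical, so consult the API reference for the real endpoint and schema.

```python
import json
import urllib.request

# Hypothetical base URL and path; the real ones are in the ComplyAdvantage Mesh API reference.
BASE_URL = "https://api.example.com"


def build_config_update(config_id: str, changes: dict, access_token: str) -> urllib.request.Request:
    """Build (but do not send) a PATCH request that updates a screening configuration."""
    body = json.dumps(changes).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/screening-configurations/{config_id}",
        data=body,
        method="PATCH",
        headers={
            "Authorization": f"Bearer {access_token}",  # an OAuth2 access token
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # "match_threshold" is a hypothetical field name used only for illustration.
    req = build_config_update("cfg-1", {"match_threshold": 0.8}, "example-token")
    print(req.get_method(), req.full_url)
```

Sending the request would then be a matter of passing it to `urllib.request.urlopen`.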

The ComplyAdvantage Mesh API is a RESTful API that conforms to the OpenAPI Specification, so it offers a standardized interface that simplifies integration with your systems.
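Because the API is described by an OpenAPI document, tooling can read the specification to discover endpoints or generate client code. The sketch below lists the operations in an OpenAPI document that has already been loaded into a Python dict. The miniature inline spec is invented for illustration and does not reflect the real Mesh endpoints.

```python
from typing import Any

# HTTP methods that the OpenAPI Specification allows as operations in a Path Item Object.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def list_operations(spec: dict[str, Any]) -> list[tuple[str, str, str]]:
    """Return (METHOD, path, operationId) for every operation, sorted by path then method."""
    operations = []
    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method in HTTP_METHODS:  # skip non-operation keys such as "parameters"
                operations.append((method.upper(), path, operation.get("operationId", "")))
    return sorted(operations, key=lambda op: (op[1], op[0]))


# Invented miniature spec, for illustration only.
EXAMPLE_SPEC = {
    "openapi": "3.0.3",
    "info": {"title": "Example", "version": "1.0"},
    "paths": {
        "/cases": {"get": {"operationId": "listCases"}},
        "/cases/{caseId}": {
            "parameters": [{"name": "caseId", "in": "path", "required": True}],
            "get": {"operationId": "getCase"},
        },
    },
}
```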

The API-first architecture of ComplyAdvantage Mesh offers a high degree of flexibility. Every platform feature is accessible through the API, enabling integration across use cases ranging from real-time payment flows to high-volume customer screening. In addition, webhook support in ComplyAdvantage Mesh delivers real-time notifications and updates, streamlining integration with your existing systems and workflows.
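On the receiving side, a webhook integration comes down to an HTTP endpoint that parses incoming notifications and routes them to the right handler. The sketch below shows only the routing step. The `type` and `data` fields and the event names are hypothetical, and a production receiver would also need to verify that each request genuinely comes from ComplyAdvantage, using whatever mechanism the API documentation specifies.

```python
import json
from typing import Callable

Handler = Callable[[dict], str]

# Hypothetical event names and payload fields; the real ones are listed in the API documentation.
HANDLERS: dict[str, Handler] = {
    "case.created": lambda event: f"queue case {event['data']['case_id']} for review",
    "screening.completed": lambda event: f"update customer {event['data']['customer_id']}",
}


def handle_webhook(raw_body: bytes) -> str:
    """Parse a JSON webhook body and dispatch it by its (assumed) 'type' field."""
    event = json.loads(raw_body)
    handler = HANDLERS.get(event.get("type", ""))
    if handler is None:
        return "ignored"  # unknown event types are acknowledged but not processed
    return handler(event)
```

Returning quickly and deferring heavy work to a queue is a common design choice for webhook receivers, since the sender may time out or retry slow deliveries.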

ComplyAdvantage Mesh prioritizes both security and usability. The platform uses OAuth2, an industry-standard protocol, to secure API access. Mesh also integrates with your institution's single sign-on (SSO) system to streamline user access, and offers multi-factor authentication (MFA) as an additional layer of protection.
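For machine-to-machine integrations, OAuth2 is often used with the client-credentials grant (RFC 6749, section 4.4). The sketch below assumes that grant applies here, which should be confirmed against the API documentation; the token URL is hypothetical. It builds the token request with HTTP Basic client authentication and caches the resulting `access_token` until shortly before its `expires_in` lifetime runs out.

```python
import base64
import time
import urllib.parse
import urllib.request
from typing import Callable, Optional

# Hypothetical token endpoint; use the one given in the API documentation.
TOKEN_URL = "https://auth.example.com/oauth/token"


def build_token_request(client_id: str, client_secret: str) -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 client-credentials token request."""
    # RFC 6749, section 2.3.1: form-encode the id and secret, then Base64 them for HTTP Basic.
    pair = f"{urllib.parse.quote_plus(client_id)}:{urllib.parse.quote_plus(client_secret)}"
    return urllib.request.Request(
        TOKEN_URL,
        data=urllib.parse.urlencode({"grant_type": "client_credentials"}).encode("ascii"),
        method="POST",
        headers={
            "Authorization": "Basic " + base64.b64encode(pair.encode("utf-8")).decode("ascii"),
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


class TokenCache:
    """Reuse an access token until `margin` seconds before it expires, then fetch a new one."""

    def __init__(self, fetch: Callable[[], dict], clock: Callable[[], float] = time.monotonic,
                 margin: float = 30.0):
        self._fetch = fetch  # returns a parsed token response with "access_token" and "expires_in"
        self._clock = clock
        self._margin = margin
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        if self._token is None or self._clock() >= self._expires_at - self._margin:
            response = self._fetch()
            self._token = response["access_token"]
            self._expires_at = self._clock() + float(response.get("expires_in", 0))
        return self._token
```

Caching avoids requesting a new token on every API call, which matters for the real-time, low-latency paths described above.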
