Guide: Your AML roadmap for SEPA instant payments

Find out how the move to SEPA instant payments affects your customer screening procedures.

In February 2024, the European Parliament approved new rules to ensure that transferred funds arrive immediately in bank accounts held anywhere in the European Union. Besides requiring banks and other payment service providers (PSPs) to update their regulatory obligations, the new legislation amended the Single Euro Payments Area (SEPA) Instant Credit Transfer rulebook.

In light of these recent developments, this article reviews the evolving payments landscape in the European Union (EU) and addresses the following questions:

SEPA is a framework defined by the EU to harmonize euro-denominated payments across participating countries. Its primary goal is to create a more integrated and efficient market for payment services in Europe. By making euro transactions seamless, the initiative removes the distinction between domestic and cross-border payments.

SEPA payments cover all euro-denominated payments made between accounts in the SEPA zone using standardized procedures and formats, including:

A SEPA mandate is a key element of SEPA payments, particularly for direct debits: it lets the payer authorize the biller to debit their bank account. Originally, SEPA mandates had to be signed on paper. In 2012, however, Regulation (EU) No 260/2012, adopted by the European Parliament and the Council to harmonize euro payment systems, encouraged the transition to digital mandates. Today, the payer's authorization can be obtained through either a paper or a paperless process. Electronic signature of a SEPA mandate is nevertheless strongly encouraged because of its efficiency and legal certainty.

An electronic mandate serves as evidence that billers can use to justify debits and protect themselves against claims about unauthorized transactions. Because payers can request a refund of unauthorized debits within 13 months, being able to produce solid proof of authorization is important.
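As a rough illustration of the 13-month window mentioned above, the following sketch computes the date until which a biller might want to keep proof of authorization readily available. The function names, the choice of the debit date as the start of the window, and the end-of-month clamping rule are assumptions made for this example, not rules taken from the SEPA rulebooks.

```python
import calendar
from datetime import date

# Window length taken from the text above; everything else is illustrative.
REFUND_WINDOW_MONTHS = 13


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`.

    If the target month is shorter than the start month, the day is
    clamped to the last day of the target month (an assumption).
    """
    index = start.year * 12 + (start.month - 1) + months
    year, month_zero_based = divmod(index, 12)
    month = month_zero_based + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def refund_deadline(debit_date: date) -> date:
    """Last day on which an unauthorized-debit claim is assumed possible."""
    return add_months(debit_date, REFUND_WINDOW_MONTHS)


def proof_still_needed(debit_date: date, today: date) -> bool:
    """True while the assumed refund window for this debit is still open."""
    return today <= refund_deadline(debit_date)


if __name__ == "__main__":
    debit = date(2024, 3, 15)
    print(refund_deadline(debit))
    print(proof_still_needed(debit, date(2025, 1, 1)))
```

In practice, the retention period for mandate evidence is a legal and policy decision; the calculation only shows the date arithmetic involved.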

SEPA payments, credit transfers, and direct debits offer financial institutions many benefits, including:

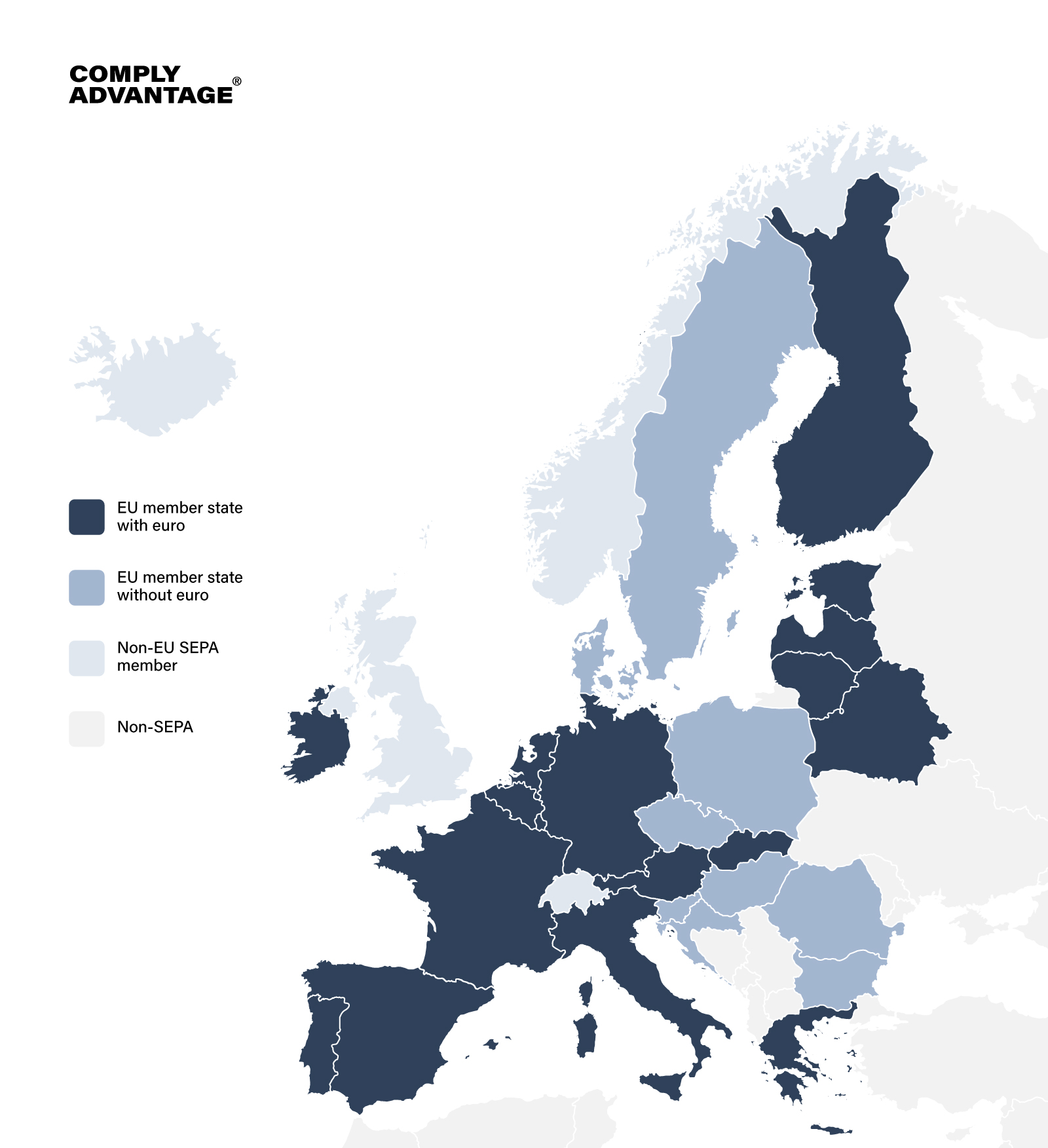

The SEPA zone comprises 36 countries, including the EU member states and other countries that have chosen to participate. The countries in the SEPA zone are:

Andorra, Austria, Belgium, Bulgaria, Croatia, Cyprus, Czech Republic, Denmark, Estonia, Finland, France, Germany, Greece, Hungary, Iceland, Ireland, Italy, Latvia, Liechtenstein, Lithuania, Luxembourg, Malta, Monaco, Netherlands, Norway, Poland, Portugal, Romania, San Marino, Slovakia, Slovenia, Spain, Sweden, Switzerland, United Kingdom, Vatican.
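The country list above can be turned into a simple lookup, for example to flag whether an account's IBAN country prefix falls inside the SEPA zone. The sketch below maps the 36 countries to their ISO 3166-1 alpha-2 codes; the function name is hypothetical, and a production check would also need to handle territories and special cases that this simplified set ignores.

```python
# ISO 3166-1 alpha-2 codes for the 36 countries listed above.
SEPA_COUNTRY_CODES = frozenset({
    "AD", "AT", "BE", "BG", "HR", "CY", "CZ", "DK", "EE",
    "FI", "FR", "DE", "GR", "HU", "IS", "IE", "IT", "LV",
    "LI", "LT", "LU", "MT", "MC", "NL", "NO", "PL", "PT",
    "RO", "SM", "SK", "SI", "ES", "SE", "CH", "GB", "VA",
})


def iban_country_in_sepa(iban: str) -> bool:
    """Return True if the IBAN's two-letter country prefix is in the list.

    This looks only at the prefix; it does not validate the IBAN itself.
    """
    compact = "".join(iban.split()).upper()
    return compact[:2] in SEPA_COUNTRY_CODES


if __name__ == "__main__":
    print(iban_country_in_sepa("DE89 3704 0044 0532 0130 00"))
    print(iban_country_in_sepa("BR15 0000 0000 0000 1093 2840 814P 2"))
```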

SEPA rests on a solid legal framework that ensures smooth operation and compliance across all member states. It is based on several major regulations and directives, namely:

Building on this legal foundation, the European Payments Council (EPC) has developed five distinct SEPA payment schemes, each designed for specific transaction types and user needs.

Although three of these five schemes are dedicated to instant payments, the market remains fragmented. In 2023, only about one in ten euro credit transfers made in the European Union was processed as an instant payment.

To promote real-time payments in Europe, the European Council has prioritized establishing a fully integrated instant payments market across the region. To that end, a new regulation (Regulation (EU) 2024/886), introduced in early 2024, amends the SEPA rulebook on instant credit transfers and sets out the following requirements for financial institutions:

The introduction of new instant payments regulations in Europe poses several significant challenges for financial institutions across the SEPA zone, including:

One of the main challenges is the obligation to implement an IBAN verification system by November 2025. This calls for a unified market, which is currently hindered by different countries having adopted different solutions. Implementing a unified system across markets would involve:

Institutions that do not yet offer instant payment services have little time left to develop and deploy the necessary infrastructure. The main technical challenges include:

Financial institutions that already offer instant payment services face additional challenges, namely:

As EU regulation continues to be updated to standardize cross-border instant payments in euros and encourage their widespread adoption, institutions will face new requirements under a phased implementation timeline. To ensure compliance, institutions will need to:

Leverage technology by drawing on advanced solutions, including artificial intelligence (AI) and machine learning (ML), to manage compliance, scalability, and screening processes efficiently. These technologies can improve the accuracy of AML/CFT measures, streamline beneficiary verification, and strengthen overall operational performance.
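To make the idea of beneficiary verification more concrete, here is a minimal sketch of two checks that such a process might combine: a structural IBAN check using the standard mod-97 checksum, and a simple name-similarity score. It is not the EPC's verification scheme nor any vendor's method. The function names, the use of `difflib` for name matching, and the 0.95 and 0.80 thresholds are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def iban_checksum_ok(iban: str) -> bool:
    """Check the IBAN mod-97 checksum (structure only, not account existence)."""
    compact = "".join(iban.split()).upper()
    if not (compact.isascii() and compact.isalnum() and 15 <= len(compact) <= 34):
        return False
    # Move country code and check digits to the end, then map A..Z to 10..35.
    rearranged = compact[4:] + compact[:4]
    digits = "".join(str(int(ch, 36)) for ch in rearranged)
    return int(digits) % 97 == 1


def normalize_name(name: str) -> str:
    """Lowercase the name and collapse runs of whitespace."""
    return " ".join(name.casefold().split())


def name_similarity(given: str, on_record: str) -> float:
    """Similarity between 0.0 and 1.0 based on difflib's ratio."""
    return SequenceMatcher(None, normalize_name(given), normalize_name(on_record)).ratio()


def classify_payee(given: str, on_record: str) -> str:
    """Map the similarity score to a label using hypothetical thresholds."""
    score = name_similarity(given, on_record)
    if score >= 0.95:
        return "match"
    if score >= 0.80:
        return "close_match"
    return "no_match"


if __name__ == "__main__":
    print(iban_checksum_ok("DE89 3704 0044 0532 0130 00"))
    print(classify_payee("Jean Dupont", "Jean Dupond"))
```

A real deployment would likely rely on more robust matching (transliteration, legal-entity suffixes, nicknames) and on thresholds tuned against actual data; the sketch only shows how the two checks fit together.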

Originally published 13 September 2024, updated 16 September 2024

Disclaimer: This document is intended for general information only. The information presented does not constitute legal advice. ComplyAdvantage accepts no responsibility for the information contained in this document and disclaims and excludes any liability in respect of its contents or for action taken on the basis of this information.

Copyright © 2025 IVXS UK Limited (trading as ComplyAdvantage)